NTT and the University of Tokyo Develop World’s First Optical Computing AI Using an Algorithm Inspired by the Human Brain

Collaboration advances the practical application of low-power, high-speed AI based on optical computing

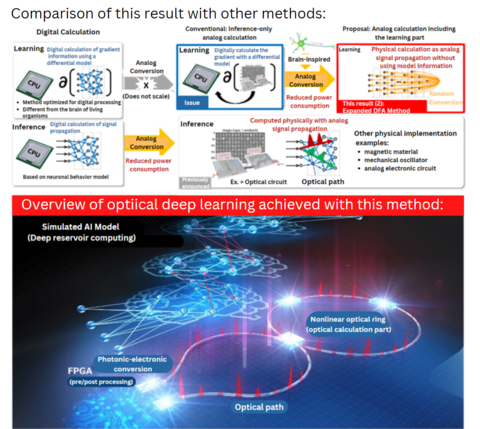

Figure 1: (Top) Comparison of this result with other methods. (Bottom) Overview of optical deep learning achieved with this method. (Graphic: Business Wire)

Researchers achieved the world’s first demonstration of efficient optical deep neural network (DNN) learning by applying a brain-inspired learning algorithm to a DNN that performs optical analog computation. This approach is expected to enable high-speed, low-power machine learning devices. They also achieved the world’s highest performance for a multi-layer artificial neural network that uses analog operations.

Until now, the computationally heavy learning calculations have been performed digitally. This result shows that the learning process can be made more efficient by using analog computation. In this work, a recurrent neural network known as deep reservoir computing is implemented optically: optical pulses serve as neurons, and a nonlinear optical ring serves as a neural network with recurrent connections. Feeding the output signal back into the same optical circuit artificially deepens the network.
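The idea of deepening a network by reusing one circuit can be illustrated with a minimal software sketch. It does not model the optical hardware. The tanh state update that stands in for the nonlinear optical ring, the weight scales, the reservoir size, and the helper names (`reservoir_pass`, `deep_reservoir`) are all hypothetical. What it shows is that every layer reuses the same fixed random recurrent weights `w_res`, and depth comes only from feeding each layer's output back into that reservoir.

```python
import math
import random


def random_matrix(rows, cols, scale, rng):
    """Matrix with entries drawn uniformly from [-scale, scale]."""
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def reservoir_pass(inputs, w_in, w_res):
    """Run one reservoir layer over a sequence with fixed (untrained) weights.

    x_t = tanh(W_in u_t + W_res x_{t-1}); the tanh stands in for the
    nonlinear optical ring (a modeling assumption, not an optical simulation).
    """
    state = [0.0] * len(w_res)
    states = []
    for u in inputs:
        drive = [p + q for p, q in zip(matvec(w_in, u), matvec(w_res, state))]
        state = [math.tanh(d) for d in drive]
        states.append(state)
    return states


def deep_reservoir(inputs, n_layers, size=8, seed=0):
    """Deepen the network by feeding each layer's output back into the same reservoir."""
    rng = random.Random(seed)
    w_first = random_matrix(size, len(inputs[0]), 0.5, rng)  # external input -> reservoir
    w_back = random_matrix(size, size, 0.5, rng)              # re-input signal -> reservoir
    w_res = random_matrix(size, size, 1.0 / size, rng)        # shared recurrent weights
    layers, signal = [], inputs
    for k in range(n_layers):
        signal = reservoir_pass(signal, w_first if k == 0 else w_back, w_res)
        layers.append(signal)
    return layers


if __name__ == "__main__":
    seq = [[math.sin(0.3 * t)] for t in range(20)]
    layers = deep_reservoir(seq, n_layers=3)
    print(len(layers), len(layers[0]), len(layers[0][0]))  # 3 20 8
```

Because `w_res` is drawn once and never trained, adding layers adds no new recurrent weights. This loosely mirrors re-inputting the signal to a single physical circuit. The separate `w_first` matrix is a simplification of this sketch: it exists only because the external input and the reservoir state have different sizes.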

DNN technology enables advanced artificial intelligence (AI) applications such as machine translation, autonomous driving, and robotics. However, the power and computation time these applications require are currently growing faster than the performance of digital computers. DNNs that use analog signal calculations (analog operations) are expected to provide highly efficient, high-speed computation similar to that of the brain's neural networks. The collaboration between

The proposed method updates the learning parameters using a nonlinear random transformation of the error signal, that is, the error between the output of the network's final layer and the desired output. Because this calculation requires no differential information about the network model, it is easier to implement with analog hardware such as optical circuits. Beyond physical implementations, the method can also be applied to cutting-edge models used in applications such as machine translation, and to a variety of AI models, including DNNs. This research is expected to help address emerging problems in AI computing, including growing power consumption and calculation time.
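The update rule described above can be sketched for a tiny two-layer network. This is a hedged illustration, not the authors' implementation. It assumes a tanh hidden layer, a single linear output (so the fixed random feedback matrix reduces to a vector `b`), and `cos` as the nonlinearity applied to the hidden pre-activations. All of these are illustrative choices. The hidden layer never reads the output weights and never differentiates the model. Its error signal comes only from the final-layer error `e` through the fixed random transformation.

```python
import math
import random


def forward(w1, w2, x):
    """Return hidden pre-activations a, hidden outputs h = tanh(a), and the scalar output y."""
    a = [sum(w * xi for w, xi in zip(row, x)) for row in w1]
    h = [math.tanh(v) for v in a]
    y = sum(w * hj for w, hj in zip(w2, h))
    return a, h, y


def mse(w1, w2, data):
    """Mean of 0.5 * (y - t)^2 over the dataset."""
    return sum(0.5 * (forward(w1, w2, x)[2] - t) ** 2 for x, t in data) / len(data)


def train_dfa(data, hidden=16, lr=0.05, epochs=200, seed=0):
    """Train with a DFA-style rule; return (loss before, loss after)."""
    rng = random.Random(seed)
    n_in = len(data[0][0])
    w1 = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(hidden)]
    w2 = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    # Fixed random feedback weights, used in place of the transpose of w2.
    b = [rng.uniform(-0.5, 0.5) for _ in range(hidden)]
    before = mse(w1, w2, data)
    for _ in range(epochs):
        for x, t in data:
            a, h, y = forward(w1, w2, x)
            e = y - t  # error signal of the final layer
            # Output layer: delta rule driven by the final-layer error.
            for j in range(hidden):
                w2[j] -= lr * e * h[j]
            # Hidden layer: nonlinear random transformation of the same error,
            # g(a_j) * b_j * e with g = cos (illustrative; not the tanh derivative).
            for j in range(hidden):
                delta = math.cos(a[j]) * b[j] * e
                for i in range(n_in):
                    w1[j][i] -= lr * delta * x[i]
    return before, mse(w1, w2, data)


if __name__ == "__main__":
    rng = random.Random(1)
    data = []
    for _ in range(20):
        x = [rng.uniform(-1, 1), rng.uniform(-1, 1)]
        data.append((x, math.sin(x[0]) + 0.5 * x[1]))  # toy regression target
    before, after = train_dfa(data)
    print(f"loss {before:.4f} -> {after:.4f}")
```

Both weight updates use only the scalar error `e`, the forward-pass values, and the fixed vector `b`. This independence from the forward weights and from model derivatives is what makes such rules attractive for analog hardware.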

In addition to examining the applicability of the method proposed in this paper to specific problems,

Support for this Research:

JST/CREST supported part of these research results.

Journal Publication:

Journal:

Article Title: Physical

Authors: Mitsumasa Nakajima,

Explanation of Terminology:

- Optical circuit: A circuit in which silicon or quartz optical waveguides are integrated onto a silicon wafer using electronic circuit manufacturing technology. In optical communications, such circuits branch and merge optical paths using optical interference, wavelength multiplexing/demultiplexing, and similar techniques.

- Backpropagation (BP) method: The most commonly used learning algorithm in deep learning. The gradients of the network's weights (parameters) are obtained by propagating the error signal backward through the network, and the weights are updated to reduce the error. Because backpropagation requires the transpose of the network's weight matrices and the derivatives of its nonlinear functions, it is difficult to implement in analog circuits, including the brains of living organisms.

- Analog computing: A form of computing that represents real values using physical quantities, such as the intensity and phase of light or the direction and intensity of magnetic spins, and performs calculations by changing these quantities according to the laws of physics.

- Direct feedback alignment (DFA) method: A method that approximates the error signal of each layer by applying a nonlinear random transformation to the error signal of the final layer. Because it requires no differential information about the network model and can be computed using only parallel random transformations, it is well suited to analog computation.

- Reservoir computing: A type of recurrent neural network with recurrent connections in the hidden layer. Its defining feature is that the connections in this intermediate layer, called the reservoir layer, are set randomly and then kept fixed. Deep reservoir computing processes information by stacking multiple reservoir layers.
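The difference between the backpropagation and DFA entries above comes down to how a hidden layer's error is formed. The following sketch contrasts the two for a single hidden layer. The helper names are hypothetical, tanh activations are assumed, and `cos` is an illustrative stand-in for the nonlinear function. BP needs the transpose of the next layer's weight matrix and the derivative of the activation. The DFA-style rule needs only a fixed random matrix `b` applied to the final-layer error.

```python
import math


def transpose(m):
    return [list(col) for col in zip(*m)]


def matvec(m, v):
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def bp_hidden_error(w_next, e, a):
    """Backpropagation: transpose of the next layer's weights times the error,
    multiplied elementwise by the tanh derivative at the pre-activations a."""
    back = matvec(transpose(w_next), e)
    return [bk * (1.0 - math.tanh(ak) ** 2) for bk, ak in zip(back, a)]


def dfa_hidden_error(b, e, a, g=math.cos):
    """DFA-style: a fixed random matrix b projects the final-layer error straight
    to this layer; g is an arbitrary nonlinearity (cos is an illustrative choice)."""
    proj = matvec(b, e)
    return [pk * g(ak) for pk, ak in zip(proj, a)]


if __name__ == "__main__":
    w_next = [[0.2, -0.4, 0.1], [0.3, 0.5, -0.2]]  # 2 outputs x 3 hidden units
    e = [0.1, -0.3]                                # final-layer error
    a = [0.5, -1.0, 0.2]                           # hidden pre-activations
    b = [[0.7, -0.1], [-0.6, 0.4], [0.2, 0.9]]     # fixed random feedback (hypothetical values)
    print(bp_hidden_error(w_next, e, a))
    print(dfa_hidden_error(b, e, a))
```

As a sanity check, setting `b` to the transpose of `w_next` and `g` to the tanh derivative makes both functions return identical values for the hidden layer that feeds the output. DFA instead relies on a fixed random `b` that never has to match the forward weights.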

View source version on businesswire.com: https://www.businesswire.com/news/home/20230208005258/en/

For

+1-804-362-7484

srussell@wireside.com
