Nebius AI Studio: a high-performing Inference-as-a-Service platform recognized for cost efficiency

Nebius AI Studio has been recognized as one of the most competitive Inference-as-a-Service platforms by Artificial Analysis. The platform offers access to leading open-source models including Llama 3.1 and Mistral families, with per-token pricing for affordable application development.

Key features include pricing up to 50% lower than major competitors, batch processing of up to 5 million requests per file, support for file sizes up to 10 GB, and throughput capacity of 10 million tokens per minute. The platform's Llama-3.1-405B model offers performance comparable to GPT-4 at lower cost. Endpoints are hosted in Finland and Paris, with expansion to the U.S. upcoming.

Nebius AI Studio offers app builders access to an extensive and constantly growing library of leading open-source models (including the Llama 3.1 and Mistral families, Nemo, Qwen, and Llama OpenbioLLM, as well as upcoming text-to-image and text-to-video models) with per-token pricing, enabling the creation of fast, low-latency applications at an affordable price.

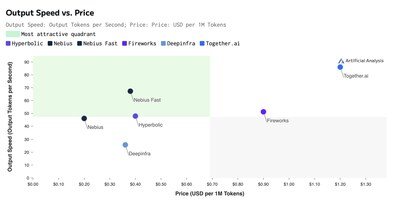

Artificial Analysis assessed key metrics including quality, speed, and price across all endpoints on the Nebius AI Studio platform. The results showed that the open-source models available on Nebius AI Studio are among the most competitive offerings in the market, with the Llama 3.1 Nemotron 70B and Qwen 2.5 72B models in the most attractive quadrant of the Output Speed vs. Price chart.

Photo - https://mma.prnewswire.com/media/2568823/Nebius.jpg

Source: https://artificialanalysis.ai/providers/nebius

Roman Chernin, co-founder and Chief Business Officer at Nebius, said:

"Nebius AI Studio represents the logical next step in expanding Nebius's offering to service the explosive growth of the global AI industry.

Our approach is unique because it combines our robust infrastructure and powerful GPU capabilities. The vertical integration of our inference-as-a-service offering on top of our full-stack AI infrastructure ensures seamless performance and enables us to offer optimized, high-performance services across all aspects of our platform. The results confirmed by Artificial Analysis's benchmarking are a testament to our commitment to delivering a competitive offering in terms of both price and performance, setting us apart in the market."

Artificial Analysis evaluates the end-to-end performance of LLM inference services such as Nebius AI Studio, based on the real-world experience of customers, to provide benchmarks for AI model users. Nebius' endpoints are hosted in data centers located in Finland and Paris. The company is also expanding to the U.S. and adding offices nationwide to serve customers across the country.

George Cameron, co-founder of Artificial Analysis, added:

"In our independent benchmarks of Nebius' endpoints, results show they are amongst the most competitive in terms of price. In particular, Nebius is offering Llama 3.1 405B, Meta's leading model, at

Nebius AI Studio unlocks a broad spectrum of possibilities, offering flexibility for customers and partners across industries, from healthcare to entertainment and design. Its versatile capabilities empower diverse GenAI builders to tailor solutions that meet the needs of each sector in the market.

Key features of Nebius AI Studio include:

- Higher value at a competitive price: The platform's pricing is up to 50% lower than other big-name providers, offering unparalleled cost-efficiency for GenAI builders.

- Batch inference processing power: The platform enables processing of up to 5 million requests per file, a hundred-fold increase over industry norms, while supporting massive file sizes up to 10 GB. GenAI app builders are equipped to process entire datasets with support for 500 files per user simultaneously for large-scale AI operations.

- Open-source model access: Nebius AI Studio supports a wide range of cutting-edge open-source AI models including the Llama and Mistral families, as well as specialist LLMs such as OpenbioLLM. Nebius' flagship hosted model, Meta's Llama-3.1-405B, offers performance comparable to GPT-4 at far lower cost.

- High rate limits: Nebius AI Studio offers up to 10 million tokens per minute (TPM), with additional headroom for scaling based on workload demands. This robust throughput capacity ensures consistent performance, even during peak demand, allowing AI builders to handle large volumes of real-time predictions with ease and efficiency.

- User-friendly interface: The Playground side-by-side comparison feature allows builders to test and compare different models without writing code, view API code for seamless integration, and adjust generation parameters to fine-tune outputs.

- Dual-flavor approach: Nebius AI Studio offers a dual-flavor approach for optimizing performance and cost. The fast flavor delivers blazing speed for real-time applications, while the base flavor maximizes cost-efficiency for less time-sensitive tasks.
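To make the batch and rate-limit figures above concrete, here is a minimal planning sketch in Python. It uses only the numbers quoted in the feature list (5 million requests per file, 10 GB per file, 500 simultaneous files per user, 10 million tokens per minute). The function name `plan_batch`, the `BatchPlan` type, and the inputs are hypothetical and not part of any Nebius API. It also assumes that "10 GB" means decimal gigabytes and that the TPM figure applies to the workload being estimated, neither of which the release states.

```python
from dataclasses import dataclass

# Limits quoted in the feature list above.
MAX_REQUESTS_PER_FILE = 5_000_000
MAX_FILE_BYTES = 10 * 10**9        # "10 GB"; decimal gigabytes is an assumption
MAX_CONCURRENT_FILES = 500
TOKENS_PER_MINUTE = 10_000_000     # assumed to apply to this workload


@dataclass
class BatchPlan:
    files_needed: int
    fits_concurrently: bool
    min_minutes_at_rate_limit: float


def plan_batch(total_requests: int,
               avg_request_bytes: int,
               avg_tokens_per_request: int) -> BatchPlan:
    """Hypothetical helper: split a workload into files under the quoted limits."""
    if min(total_requests, avg_request_bytes, avg_tokens_per_request) <= 0:
        raise ValueError("all inputs must be positive")

    # A file is capped by request count and by size; the tighter cap wins.
    requests_by_size = MAX_FILE_BYTES // avg_request_bytes
    per_file = min(MAX_REQUESTS_PER_FILE, requests_by_size)
    if per_file == 0:
        raise ValueError("a single request exceeds the file size limit")

    # Integer ceiling division avoids floating-point rounding.
    files_needed = (total_requests + per_file - 1) // per_file

    # Lower bound on wall-clock time if throughput were exactly the TPM figure.
    total_tokens = total_requests * avg_tokens_per_request
    minutes = total_tokens / TOKENS_PER_MINUTE

    return BatchPlan(files_needed, files_needed <= MAX_CONCURRENT_FILES, minutes)


if __name__ == "__main__":
    # 12M requests of about 4 KB and 500 tokens each (illustrative numbers only).
    print(plan_batch(12_000_000, 4_000, 500))
```

With these illustrative inputs the size cap (2.5 million requests per file) is tighter than the request-count cap, so the workload needs five files. The time estimate is only a floor, since real throughput depends on model, flavor, and load.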

For more information, please visit our blog:

Introducing Nebius AI Studio: Achieve fast, flexible inference today.

About Nebius

Nebius (NASDAQ: NBIS) is a technology company building full-stack infrastructure to service the explosive growth of the global AI industry, including large-scale GPU clusters, cloud platforms, and tools and services for developers. Headquartered in

Nebius's core business is an AI-centric cloud platform built for intensive AI workloads. With proprietary cloud software architecture and hardware designed in-house (including servers, racks and data center design), Nebius gives AI builders the compute, storage, managed services and tools they need to build, tune and run their models.

A Preferred cloud service provider in the NVIDIA Partner Network, Nebius offers high-end infrastructure optimized for AI training and inference. The company boasts a team of over 500 skilled engineers, delivering a true hyperscale cloud experience tailored for AI builders.

To learn more please visit www.nebius.com

About Artificial Analysis

Artificial Analysis is an independent analysis company for AI models and API providers.

Artificial Analysis provides objective benchmarks and information to support developers, customers, researchers, and other users of AI models to make informed decisions in choosing the best AI models for their use case.

https://artificialanalysis.ai/

Logo - https://mma.prnewswire.com/media/2531117/5051018/Nebius_Logo.jpg

View original content to download multimedia: https://www.prnewswire.com/news-releases/nebius-ai-studio-a-high-performing-inference-as-a-service-platform-recognized-for-cost-efficiency-302317788.html

SOURCE Nebius