New IBM Processor Innovations To Accelerate AI on Next-Generation IBM Z Mainframe Systems

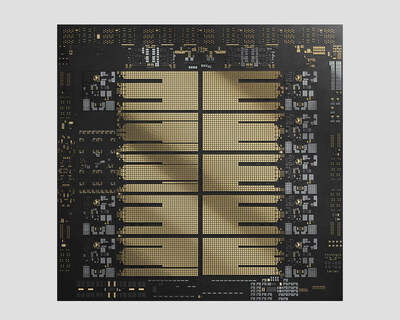

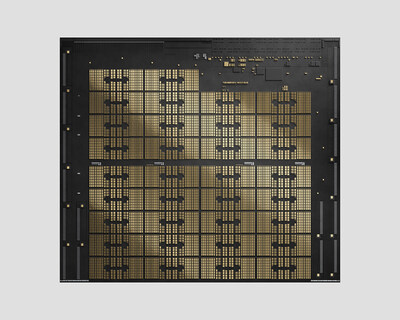

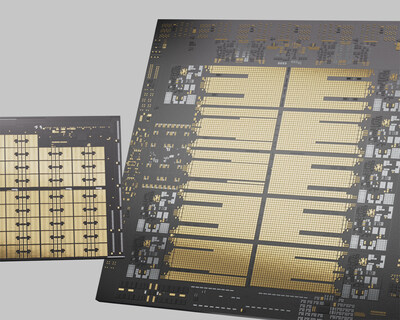

IBM unveiled architecture details for the upcoming IBM Telum II Processor and IBM Spyre Accelerator at Hot Chips 2024. These innovations are designed to scale processing capacity across next-generation IBM Z mainframe systems, accelerating traditional AI models and Large Language Models (LLMs) through a new ensemble method of AI. The Telum II Processor features increased frequency, memory capacity, and a 40% growth in cache compared to its predecessor. The Spyre Accelerator provides additional AI compute capability, forming a scalable architecture to support ensemble AI modeling. Both will be manufactured by Samsung Foundry on a 5nm process node and are expected to be available to clients in 2025.

- Telum II Processor features eight high-performance cores running at 5.5GHz, with 36MB L2 cache per core

- 40% increase in on-chip cache capacity for a total of 360MB

- Integrated AI accelerator provides a fourfold increase in compute capacity per chip over the previous generation

- New I/O Acceleration Unit DPU improves data handling with a 50% increase in I/O density

- Spyre Accelerator offers up to 1TB of memory and 32 compute cores per chip

- Designed to consume no more than 75W per card, addressing energy efficiency concerns

Insights

IBM's upcoming Telum II Processor and Spyre Accelerator represent significant advancements in AI-focused mainframe computing. The Telum II boasts impressive specs: 8 cores at 5.5GHz, 36MB L2 cache per core and a 40% increase in on-chip cache. Its integrated AI accelerator offers 4x compute capacity over its predecessor, important for real-time AI inferencing.
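The cache figures quoted above can be cross-checked with a little arithmetic. The sketch below uses only the numbers stated in this release (eight cores, 36MB of L2 per core, 360MB of on-chip cache in total, a 40% increase). The variable names are illustrative, and the release does not say how the capacity beyond the per-core L2 is organized, so the script only reports the difference.

```python
# Figures quoted in the release.
CORES = 8
L2_MB_PER_CORE = 36
TOTAL_ON_CHIP_CACHE_MB = 360
CACHE_GROWTH = 0.40  # "40% increase" over the previous generation

# L2 capacity attributable to the eight cores.
core_l2_mb = CORES * L2_MB_PER_CORE

# On-chip cache not accounted for by per-core L2 (organization not stated in the source).
remaining_mb = TOTAL_ON_CHIP_CACHE_MB - core_l2_mb

# Previous-generation total implied by "40% more for a total of 360MB".
implied_prev_mb = TOTAL_ON_CHIP_CACHE_MB / (1 + CACHE_GROWTH)

print(f"per-core L2 total: {core_l2_mb} MB")
print(f"other on-chip cache: {remaining_mb} MB")
print(f"implied previous-generation cache: {implied_prev_mb:.1f} MB")
```

The implied previous-generation figure is derived from the stated percentage rather than taken from the release, so it should be read as approximate.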

The Spyre Accelerator, designed for complex AI models, supports up to 1TB of memory and features 32 compute cores per chip supporting int4, int8, fp8, and fp16 datatypes. This combination enables ensemble AI, merging traditional models with LLMs for potentially more accurate results. The focus on power efficiency (75W per Spyre card) addresses the growing energy concerns in AI computing.

These innovations position IBM to compete in the rapidly evolving AI hardware market, particularly for enterprise-scale applications requiring high security and efficiency.

IBM's strategic move into AI-optimized mainframe systems could significantly impact its market position. The company is addressing critical challenges in enterprise AI adoption: scalability, security and energy efficiency. By integrating AI capabilities directly into its mainframe architecture, IBM is leveraging its strong enterprise customer base to potentially capture a larger share of the growing AI infrastructure market.

The expected 2025 availability of these innovations aligns with projected growth in enterprise AI adoption. However, IBM faces stiff competition from cloud providers and specialized AI chip makers. The success of this initiative will depend on IBM's ability to demonstrate clear performance and cost advantages over alternatives, particularly in handling complex, secure workloads that benefit from mainframe architecture.

IBM's focus on ensemble AI methods is a shrewd move, addressing the growing demand for more sophisticated AI solutions in enterprise settings. This approach, combining traditional models with LLMs, could provide a competitive edge in accuracy and versatility for complex business applications like fraud detection and anti-money laundering.

The emphasis on power efficiency is timely, given projections of AI's escalating energy demands. IBM's solution could appeal to organizations looking to balance AI capabilities with sustainability goals. However, the 2025 availability timeline may be a concern in the fast-moving AI landscape. IBM will need to maintain momentum and possibly offer interim solutions to prevent customer attrition to more immediately available alternatives.

New IBM Telum II Processor and IBM Spyre Accelerator unlock capabilities for enterprise-scale AI, including large language models and generative AI

Advanced I/O technology enables and simplifies a scalable I/O sub-system designed to reduce energy consumption and data center footprint

With many generative AI projects leveraging Large Language Models (LLMs) moving from proof-of-concept to production, the demands for power-efficient, secured and scalable solutions have emerged as key priorities. Morgan Stanley research published in August projects generative AI's power demands will skyrocket

The key innovations unveiled today include:

- IBM Telum II Processor: Designed to power next-generation IBM Z systems, the new IBM chip features increased frequency and memory capacity, a 40 percent growth in cache, an integrated AI accelerator core, and a coherently attached Data Processing Unit (DPU), compared with the first-generation Telum chip. The new processor is expected to support enterprise compute solutions for LLMs, servicing the industry's complex transaction needs.

- IO acceleration unit: A completely new Data Processing Unit (DPU) on the Telum II processor chip is engineered to accelerate complex IO protocols for networking and storage on the mainframe. The DPU simplifies system operations and can improve key component performance.

- IBM Spyre Accelerator: Provides additional AI compute capability to complement the Telum II processor. Working together, the Telum II and Spyre chips form a scalable architecture to support ensemble methods of AI modeling, the practice of combining multiple machine learning or deep learning AI models with encoder LLMs. By leveraging the strengths of each model architecture, ensemble AI may provide more accurate and robust results compared to individual models. The IBM Spyre Accelerator chip, previewed at the Hot Chips 2024 conference, will be delivered as an add-on option. Each accelerator chip is attached via a 75-watt PCIe adapter and is based on technology developed in collaboration with IBM Research. As with other PCIe cards, the Spyre Accelerator is scalable to fit client needs.
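To make the ensemble idea concrete, here is a minimal sketch of one common way to combine models: a weighted average of their scores. It is not IBM's implementation. The two scoring functions, the weights, and the sample claim text are hypothetical stand-ins for a traditional model and an encoder LLM.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# A scorer maps a claim description to a fraud probability in [0, 1].
Scorer = Callable[[str], float]


@dataclass(frozen=True)
class Member:
    name: str
    score: Scorer
    weight: float


def keyword_model(claim: str) -> float:
    """Hypothetical stand-in for a traditional model: fraction of risk keywords present."""
    risky = ("cash", "urgent", "no receipt")
    hits = sum(term in claim.lower() for term in risky)
    return min(1.0, hits / len(risky))


def encoder_llm_model(claim: str) -> float:
    """Hypothetical stand-in for an encoder LLM; a real one would embed and classify the text."""
    return 0.9 if "inconsistent" in claim.lower() else 0.2


def ensemble_score(claim: str, members: Sequence[Member]) -> float:
    """Weighted average of the member scores."""
    total_weight = sum(m.weight for m in members)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(m.weight * m.score(claim) for m in members) / total_weight


# Illustrative weights; in practice they would be tuned on validation data.
members = [
    Member("traditional", keyword_model, weight=0.4),
    Member("encoder_llm", encoder_llm_model, weight=0.6),
]
claim = "Urgent cash payout requested; statement inconsistent with photos"
print(f"fraud score: {ensemble_score(claim, members):.2f}")
```

Weighted averaging is only one ensemble strategy; voting, stacking, or routing low-confidence cases to the larger model are alternatives, and the release does not specify which is used.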

"Our robust, multi-generation roadmap positions us to remain ahead of the curve on technology trends, including escalating demands of AI," said Tina Tarquinio, VP, Product Management, IBM Z and LinuxONE. "The Telum II Processor and Spyre Accelerator are designed to deliver high-performance, secured, and more power efficient enterprise computing solutions. After years in development, these innovations will be introduced in our next generation IBM Z platform so clients can leverage LLMs and generative AI at scale."

The Telum II processor and the IBM Spyre Accelerator will be manufactured by IBM's long-standing fabrication partner, Samsung Foundry, and built on its high-performance, power-efficient 5nm process node. Working in concert, they will support a range of advanced AI-driven use cases designed to unlock business value and create new competitive advantages. With ensemble methods of AI, clients can achieve faster, more accurate results on their predictions. The combined processing power announced today will provide an on-ramp for the application of generative AI use cases. Some examples could include:

- Insurance Claims Fraud Detection: Enhanced fraud detection in home insurance claims through ensemble AI, which combines LLMs with traditional neural networks geared for improved performance and accuracy.

- Advanced Anti-Money Laundering: Advanced detection for suspicious financial activities, supporting compliance with regulatory requirements and mitigating the risk of financial crimes.

- AI Assistants: Accelerating the application lifecycle, the transfer of knowledge and expertise, code explanation and transformation, and more.

Specifications and Performance Metrics:

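Telum II processor: Features eight high-performance cores running at 5.5GHz, with 36MB L2 cache per core and a 40% increase in on-chip cache capacity for a total of 360MB. Its integrated AI accelerator provides a fourfold increase in compute capacity per chip over the previous generation.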

The new I/O Acceleration Unit DPU is integrated into the Telum II chip. It is designed to improve data handling with a 50% increase in I/O density.

Spyre Accelerator: A purpose-built, enterprise-grade accelerator offering scalable capabilities for complex AI models and generative AI use cases. It features up to 1TB of memory, built to work in tandem across the eight cards of a regular IO drawer, to support AI model workloads across the mainframe, and is designed to consume no more than 75W per card. Each chip will have 32 compute cores supporting int4, int8, fp8, and fp16 datatypes for both low-latency and high-throughput AI applications.
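As a rough illustration of what these figures imply, the sketch below works out a drawer-level power ceiling, a per-card memory share, and the storage needed for model weights at each listed datatype. It rests on several assumptions: the 1TB is read as the total across eight cards and split evenly, "1TB" is taken as 1024 GB, and the 7-billion-parameter model is hypothetical and not mentioned in the release.

```python
# Bits per value for the datatypes the release lists for Spyre.
BITS_PER_VALUE = {"int4": 4, "int8": 8, "fp8": 8, "fp16": 16}

CARDS_PER_DRAWER = 8      # from the release: eight cards in a regular IO drawer
MAX_WATTS_PER_CARD = 75   # from the release: no more than 75W per card
DRAWER_MEMORY_GB = 1024   # "up to 1TB", taken here as 1024 GB (assumption)


def weights_gb(num_params: int, dtype: str) -> float:
    """Memory for model weights alone, in GB (2**30 bytes); ignores activations and caches."""
    return num_params * BITS_PER_VALUE[dtype] / 8 / 2**30


# Upper bound on accelerator power for a fully populated drawer.
drawer_watts = CARDS_PER_DRAWER * MAX_WATTS_PER_CARD

# Per-card memory share, assuming the total is divided evenly across cards.
per_card_gb = DRAWER_MEMORY_GB / CARDS_PER_DRAWER

print(f"drawer power ceiling: {drawer_watts} W")
print(f"memory per card (even split): {per_card_gb:.0f} GB")

# Hypothetical model size, chosen only to show how datatype width scales the footprint.
HYPOTHETICAL_PARAMS = 7_000_000_000
for dtype in BITS_PER_VALUE:
    print(f"{dtype}: {weights_gb(HYPOTHETICAL_PARAMS, dtype):.1f} GB of weights")
```

Halving the datatype width halves the weight footprint, which is one reason lower-precision types such as int8 and int4 matter for fitting larger models within a fixed memory and power budget.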

Availability

The Telum II processor will be the central processor powering IBM's next-generation IBM Z and IBM LinuxONE platforms. It is expected to be available to IBM Z and LinuxONE clients in 2025. The IBM Spyre Accelerator, currently in tech preview, is also expected to be available in 2025.

Statements regarding IBM's future direction and intent are subject to change or withdrawal without notice, and represent goals and objectives only.

About IBM

IBM is a leading provider of global hybrid cloud and AI, and consulting expertise. We help clients in more than 175 countries capitalize on insights from their data, streamline business processes, reduce costs and gain the competitive edge in their industries. Thousands of government and corporate entities in critical infrastructure areas such as financial services, telecommunications and healthcare rely on IBM's hybrid cloud platform and Red Hat OpenShift to effect their digital transformations quickly, efficiently and securely. IBM's breakthrough innovations in AI, quantum computing, industry-specific cloud solutions and consulting deliver open and flexible options to our clients. All of this is backed by IBM's long-standing commitment to trust, transparency, responsibility, inclusivity and service.

Additional Sources

- Read more about the IBM Telum II Processor.

- Read more about the IBM Spyre Accelerator.

- Read more about the IO Accelerator.

Media Contact:

Chase Skinner

IBM Communications

chase.skinner@ibm.com

Aishwerya Paul

IBM Communications

aish.paul@ibm.com

1 Source: Morgan Stanley Research, August 2024.

View original content to download multimedia: https://www.prnewswire.com/news-releases/new-ibm-processor-innovations-to-accelerate-ai-on-next-generation-ibm-z-mainframe-systems-302229993.html

SOURCE IBM