Snowflake Expands Capabilities for Enterprises to Deliver Trustworthy AI into Production

Snowflake announced new AI capabilities at BUILD 2024 to help enterprises deliver trusted AI solutions. Key innovations include enhanced multimodal conversational app development, improved natural language processing pipelines, and Container Runtime support for ML training on distributed GPUs. The company reported that adoption of Cortex AI has more than doubled in the past six months.

New features include multimodal support for images, Microsoft SharePoint integration, document preprocessing functions, and over 20 metrics for AI app evaluation. The platform now offers expanded LLM options, serverless fine-tuning, and provisioned throughput for batch processing.

- Cortex AI adoption more than doubled in past six months

- Launch of Container Runtime support enabling GPU-powered ML training

- Integration with Microsoft SharePoint expanding data accessibility

- Addition of over 20 metrics for AI app evaluation and monitoring

Insights

Snowflake's new AI capabilities represent a significant strategic expansion in enterprise AI solutions. The introduction of Container Runtime with GPU support and Cortex AI enhancements positions Snowflake to compete more effectively in the high-growth AI infrastructure market. Key technical advancements include multimodal support, Microsoft SharePoint integration and serverless LLM fine-tuning.

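Customer testimonials demonstrate tangible business impact, with Alberta Health Services reporting a 10-15% increase in the number of patients seen per hour among the emergency department physicians testing its Cortex AI app for automating patient notes.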

The new GPU-powered capabilities and container support significantly reduce barriers to AI implementation, potentially accelerating enterprise AI adoption and Snowflake's market penetration. This could strengthen Snowflake's competitive position against cloud giants in the enterprise AI space.

This product expansion strategically positions Snowflake in the rapidly growing enterprise AI market. The focus on trustworthy AI and integrated governance directly addresses enterprise concerns about AI adoption, potentially accelerating sales cycles. The introduction of GPU support and container services opens new revenue streams and deepens customer lock-in.

The broad customer adoption, evidenced by testimonials from major enterprises like Bayer and TS Imagine, validates market demand. The reported doubling of Cortex AI adoption in six months suggests strong product-market fit and potential revenue acceleration. These developments could help Snowflake maintain its premium valuation multiple by expanding its total addressable market in the enterprise AI sector.

- Enterprises can now further accelerate multimodal conversational app development with more data sources and native agent-based orchestration

- Data teams can build more cost-effective, performant natural language processing pipelines with increased model choice, serverless LLM fine-tuning, and provisioned throughput

- Snowflake ML now supports Container Runtime, enabling users to efficiently execute large-scale ML training and inference jobs on distributed GPUs from Snowflake Notebooks

No-Headquarters/

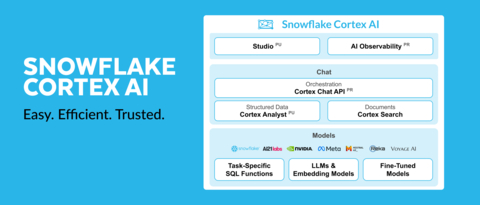

Snowflake Expands Capabilities for Enterprises to Deliver Trustworthy AI into Production (Graphic: Business Wire)

“For enterprises, AI hallucinations are simply unacceptable. Today’s organizations require accurate, trustworthy AI in order to drive effective decision-making, and this starts with access to high-quality data from diverse sources to power AI models,” said Baris Gultekin, Head of AI, Snowflake. “The latest innovations to Snowflake Cortex AI and Snowflake ML enable data teams and developers to accelerate the path to delivering trusted AI with their enterprise data, so they can build chatbots faster, improve the cost and performance of their AI initiatives, and accelerate ML development.”

Snowflake Enables Enterprises to Build High-Quality Conversational Apps, Faster

Thousands of global enterprises leverage Cortex AI to seamlessly scale and productionize their AI-powered apps, with adoption more than doubling¹ in the past six months alone. Snowflake’s latest innovations make it easier for enterprises to build reliable AI apps with more diverse data sources, simplified orchestration, and built-in evaluation and monitoring, all from within Snowflake Cortex AI, Snowflake’s fully managed AI service that provides a suite of generative AI features. Snowflake’s advancements for end-to-end conversational app development enable customers to:

- Create More Engaging Responses with Multimodal Support: Organizations can now enhance their conversational apps with multimodal inputs like images, soon to be followed by audio and other data types, using multimodal LLMs such as Meta’s Llama 3.2 models with the new Cortex COMPLETE Multimodal Input Support (private preview soon).

- Gain Access to More Comprehensive Answers with New Knowledge Base Connectors: Users can quickly integrate internal knowledge bases using managed connectors such as the new Snowflake Connector for Microsoft SharePoint (now in public preview), so they can tap into their Microsoft 365 SharePoint files and documents, automatically ingesting files without having to manually preprocess documents. Snowflake is also helping enterprises chat with unstructured data from third parties — including news articles, research publications, scientific journals, textbooks, and more — using the new Cortex Knowledge Extensions on Snowflake Marketplace (now in private preview). This is the first and only third-party data integration for generative AI that respects the publishers’ intellectual property through isolation and clear attribution. It also creates a direct pathway to monetization for content providers.

- Achieve Faster Data Readiness with Document Preprocessing Functions: Business analysts and data engineers can now easily preprocess data using short SQL functions that make PDFs and other documents AI-ready: the new PARSE_DOCUMENT (now in public preview) for layout-aware document text extraction and SPLIT_TEXT_RECURSIVE_CHARACTER (now in private preview) for text chunking, both for use with Cortex Search (now generally available).

- Reduce Manual Integration and Orchestration Work: To make it easier to receive and respond to questions grounded on enterprise data, developers can use the Cortex Chat API (public preview soon) to streamline the integration between the app front-end and Snowflake. The Cortex Chat API combines structured and unstructured data into a single REST API call, helping developers quickly create Retrieval-Augmented Generation (RAG) and agentic analytical apps with less effort.

- Increase App Trustworthiness and Enhance Compliance Processes with Built-in Evaluation and Monitoring: Users can now evaluate and monitor their generative AI apps with over 20 metrics for relevance, groundedness, stereotype, and latency, both during development and while in production using AI Observability for LLM Apps (now in private preview) — with technology integrated from TruEra (acquired by Snowflake in May 2024).

- Unlock More Accurate Self-Serve Analytics: To help enterprises easily glean insights from their structured data with high accuracy, Snowflake is announcing several improvements to Cortex Analyst (in public preview), including simplified data analysis with advanced joins (now in public preview), increased user friendliness with multi-turn conversations (now in public preview), and more dynamic retrieval with a Cortex Search integration (now in public preview).
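SPLIT_TEXT_RECURSIVE_CHARACTER shares its name with a widely used chunking technique: split on the coarsest separator first (paragraph breaks), then fall back to finer separators (line breaks, spaces, single characters) only for pieces that are still too long. The release does not document the function's parameters or behavior, so the following is a hypothetical, generic Python sketch of recursive character splitting, not Snowflake's implementation; the separator list, the `chunk_size` limit, and the absence of chunk overlap are all assumptions.

```python
from typing import List, Sequence

# Hypothetical separator order, coarsest first; an assumption, not Snowflake's defaults.
DEFAULT_SEPARATORS: Sequence[str] = ("\n\n", "\n", " ", "")


def split_recursive(text: str, chunk_size: int,
                    separators: Sequence[str] = DEFAULT_SEPARATORS) -> List[str]:
    """Split text into chunks of at most chunk_size characters.

    Splits on the first separator, greedily re-merges adjacent pieces up to
    chunk_size, and recurses with the remaining (finer) separators on any
    piece that is still too long. Separators at chunk boundaries are dropped.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if len(text) <= chunk_size:
        return [text] if text else []
    if not separators or separators[0] == "":
        # Last resort: hard cut at fixed character offsets.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    sep, finer = separators[0], separators[1:]
    chunks: List[str] = []
    current = ""
    for piece in text.split(sep):
        candidate = current + sep + piece if current else piece
        if len(candidate) <= chunk_size:
            current = candidate  # still fits: keep merging
            continue
        if current:
            chunks.append(current)  # flush the chunk built so far
            current = ""
        if len(piece) <= chunk_size:
            current = piece
        else:
            # This piece alone is too long: split it with finer separators.
            chunks.extend(split_recursive(piece, chunk_size, finer))
    if current:
        chunks.append(current)
    return chunks


if __name__ == "__main__":
    doc = "alpha beta\n\ngamma delta epsilon\n\nzeta"
    print(split_recursive(doc, chunk_size=12))
    # ['alpha beta', 'gamma delta', 'epsilon', 'zeta']
```

Greedily re-merging small pieces keeps chunks close to the size limit, so each chunk carries more context for retrieval; production splitters commonly also add overlap between neighboring chunks, which this sketch omits.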

Snowflake Empowers Users to Run Cost-Effective Batch LLM Inference for Natural Language Processing

Batch inference allows businesses to process massive datasets with LLMs simultaneously, as opposed to the individual, one-by-one approach used for most conversational apps. In turn, NLP pipelines for batch data offer a structured approach to processing and analyzing various forms of natural language data, including text, speech, and more. To help enterprises with both, Snowflake is unveiling more customization options for large batch text processing, so data teams can build NLP pipelines with high processing speeds at scale, while optimizing for both cost and performance.
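The contrast between batch and one-by-one inference can be sketched in a few lines of Python: prompts are grouped into fixed-size batches, and several batches are dispatched concurrently instead of sending one request per prompt. This is a hypothetical illustration only; `fake_model_batch` is a stand-in for a batch-capable LLM endpoint and does not represent any Cortex AI function, and the `batch_size` and `max_workers` defaults are arbitrary assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterator, List, Sequence


def batched(items: Sequence[str], size: int) -> Iterator[Sequence[str]]:
    """Yield consecutive slices of at most `size` items."""
    if size <= 0:
        raise ValueError("size must be positive")
    for start in range(0, len(items), size):
        yield items[start:start + size]


def run_batch_inference(prompts: Sequence[str],
                        model_batch: Callable[[Sequence[str]], List[str]],
                        batch_size: int = 32,
                        max_workers: int = 4) -> List[str]:
    """Send prompts to a batch-capable model in groups, several groups at once.

    Outputs come back in input order because ThreadPoolExecutor.map
    yields results in the order of its inputs.
    """
    outputs: List[str] = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for batch_result in pool.map(model_batch, batched(prompts, batch_size)):
            outputs.extend(batch_result)
    return outputs


def fake_model_batch(batch: Sequence[str]) -> List[str]:
    """Hypothetical stand-in for an LLM endpoint: one output per prompt."""
    return [f"summary of: {p}" for p in batch]


if __name__ == "__main__":
    reviews = [f"review {i}" for i in range(10)]
    results = run_batch_inference(reviews, fake_model_batch, batch_size=4)
    print(len(results), results[0])  # 10 summary of: review 0
```

In a real pipeline, batch size and concurrency would be tuned against the endpoint's rate and throughput limits.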

Snowflake is adding a broader selection of pre-trained LLMs, embedding model sizes, context window lengths, and supported languages to Cortex AI, providing organizations with increased choice and flexibility when selecting which LLM to use, while maximizing performance and reducing cost. This includes adding the multilingual embedding model from Voyage, multimodal 3.1 and 3.2 models from Llama, and huge context window models from Jamba for serverless inference. To help organizations choose the best LLM for their specific use case, Snowflake is introducing Cortex Playground (now in public preview), an integrated chat interface designed to generate and compare responses from different LLMs so users can easily find the best model for their needs.

When using an LLM for various tasks at scale, consistent outputs become even more crucial to understanding results effectively. To that end, Snowflake is unveiling the new Cortex Serverless Fine-Tuning (generally available soon), allowing developers to customize models with proprietary data to generate more accurate outputs. For enterprises that need to process large inference jobs with guaranteed throughput, the new Provisioned Throughput (public preview soon) is designed to meet that need.

Customers Can Now Expedite Reliable ML with GPU-Powered Notebooks and Enhanced Monitoring

Having easy access to scalable and accelerated compute significantly impacts how quickly teams can iterate and deploy models, especially when working with large datasets or using advanced deep learning frameworks. To support these compute-intensive workflows and speed up model development, Snowflake ML now supports Container Runtime (now in public preview on AWS and public preview soon on Microsoft Azure), enabling users to efficiently execute distributed ML training jobs on GPUs. Container Runtime is a fully managed container environment accessible through Snowflake Notebooks (now generally available) and preconfigured with access to distributed processing on both CPUs and GPUs. ML development teams can now build powerful ML models at scale, using any Python framework or language model of their choice, on top of their Snowflake data.

“As an organization connecting over 700,000 healthcare professionals to hospitals across the US, we rely on machine learning to accelerate our ability to place medical providers into temporary and permanent jobs. Using GPUs from Snowflake Notebooks on Container Runtime turned out to be the most cost-effective solution for our machine learning needs, enabling us to drive faster staffing results with higher success rates,” said Andrew Christensen, Data Scientist, CHG Healthcare. “We appreciate the ability to take advantage of Snowflake's parallel processing with any open source library in Snowflake ML, offering flexibility and improved efficiency for our workflows.”

Organizations also often require GPU compute for inference. As a result, Snowflake is providing customers with the new Model Serving in Containers (now in public preview on AWS). This enables teams to deploy both internally and externally trained models, including open source LLMs and embedding models, from the Model Registry into Snowpark Container Services (now generally available on AWS and Microsoft Azure) for production using distributed CPUs or GPUs, without complex resource optimizations.

In addition, users can quickly detect model degradation in production with built-in monitoring with the new Observability for ML Models (now in public preview), which integrates technology from TruEra to monitor performance and various metrics for any model running inference in Snowflake. In turn, Snowflake’s new Model Explainability (now in public preview) allows users to easily compute Shapley values — a widely-used approach that helps explain how each feature is impacting the overall output of the model — for models logged in the Model Registry. Users can now understand exactly how a model is arriving at its final conclusion, and detect model weaknesses by noticing unintuitive behavior in production.
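For reference, the Shapley value of feature i averages its marginal contribution over every subset S of the other features, weighted by |S|!(n−|S|−1)!/n!. The Python sketch below computes this exactly for a small hypothetical model by enumerating all subsets, treating a feature as "absent" by replacing it with a baseline value (one common convention among several). It illustrates the definition only; the release does not describe how Model Explainability computes its values, and exact enumeration is exponential in the number of features, which is why practical tools typically rely on approximations or model-specific algorithms.

```python
from itertools import combinations
from math import factorial
from typing import AbstractSet, Callable, List, Sequence

Model = Callable[[Sequence[float]], float]


def coalition_value(model: Model, x: Sequence[float], baseline: Sequence[float],
                    coalition: AbstractSet[int]) -> float:
    """Evaluate the model with features in `coalition` taken from x, the rest from baseline."""
    mixed = [x[j] if j in coalition else baseline[j] for j in range(len(x))]
    return model(mixed)


def shapley_values(model: Model, x: Sequence[float],
                   baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values by enumerating every coalition (O(2^n) model calls)."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Weight for a coalition of this size: |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                gain = (coalition_value(model, x, baseline, s | {i})
                        - coalition_value(model, x, baseline, s))
                phi[i] += weight * gain
    return phi


def toy_model(v: Sequence[float]) -> float:
    """Hypothetical model with two linear terms and one interaction term."""
    return 2 * v[0] + 3 * v[1] + v[0] * v[2]


if __name__ == "__main__":
    phi = shapley_values(toy_model, [1.0, 2.0, 3.0], [0.0, 0.0, 0.0])
    print([round(p, 6) for p in phi])  # [3.5, 6.0, 1.5]
```

The values satisfy the efficiency property: they sum to the model's output on the instance minus its output on the baseline (here 11 − 0), and the interaction term v[0]·v[2] is split evenly between features 0 and 2.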

Supporting Customer Quotes:

- Alberta Health Services: “As Alberta’s largest integrated health system, our emergency rooms get nearly 2 million visits per year. Our physicians have always needed to manually type up patient notes after each visit, requiring them to spend lots of time on administrative work,” said Jason Scarlett, Executive Director, Enterprise Data Engineering, Data & Analytics, Alberta Health Services. “With Cortex AI, we are testing a new way to automate this process through an app that records patient interactions, transcribes, and then summarizes them, all within Snowflake’s protected perimeter. It’s being used by a handful of emergency department physicians, who are reporting a 10-15% increase in the number of patients seen per hour — that means we can create less-crowded waiting rooms, relief from overwhelming amounts of paperwork for doctors, and even better-quality notes.”

- Bayer: “As one of the largest life sciences companies in the world, it’s critical that our AI systems consistently deliver accurate, trustworthy insights. This is exactly what Snowflake Cortex Analyst enables for us,” said Mukesh Dubey, Product Management and Architecture Lead, Bayer. “Cortex Analyst provides high-quality responses to natural language queries on structured data, which our team now uses in an operationally sustainable way. What I’m most excited about is that we're just getting started, and we're looking forward to unlocking more value with Snowflake Cortex AI as we accelerate AI adoption across our business.”

- Coda: “Snowflake Cortex AI forms all the core building blocks of constructing a scalable, secure AI system,” said Shishir Mehrotra, Co-founder and CEO, Coda. “Coda Brain uses almost every component in this stack: The Cortex Search engine that can vectorize and index unstructured and structured data. Cortex Analyst, which can magically turn natural language queries into SQL. The Cortex LLMs that do everything from interpreting queries to reformatting responses into human-readable responses. And, of course, the underlying Snowflake data platform, which can scale and securely handle the huge volumes of data being pulled into Coda Brain.”

- Compare Club: “At Compare Club, our mission is to help Australian consumers make more informed purchasing decisions across insurance, energy, home loans, and more, making it easier and faster for customers to sign up for the right products and maximize their budgets,” said Ryan Newsome, Head of Data and Analytics, Compare Club. “Snowflake Cortex AI has been instrumental in enabling us to efficiently analyze and summarize hundreds of thousands of pages of call transcript data, providing our teams with deep insights into customer goals and behaviors. With Cortex AI, we can securely harness these insights to deliver more tailored, effective recommendations, enhancing our members' overall experience and ensuring long-term loyalty.”

- Markaaz: “Snowflake Cortex Search has transformed the way we handle unstructured data by providing our customers with up-to-date, real-time firmographic information. We needed a way to search through millions of records that update continuously, and Cortex Search makes this possible with its powerful hybrid search capabilities,” said Rustin Scott, VP of Data Technology, Markaaz. “Snowflake further helps us deliver high-quality search results seconds to minutes after ingestion, and powers research and generative AI applications allowing us and our customers to realize the potential of our comprehensive global datasets. With fully managed infrastructure and Snowflake-grade security, Cortex Search has become an indispensable tool in our enterprise AI toolkit.”

- Osmose Utility Services: “Osmose exists to make the electric and communications grid as strong, safe, and resilient as the communities we serve,” said John Cancian, VP of Data Analytics, Osmose Utilities Services. “After establishing a standardized data and AI framework with Snowflake, we're now able to quickly deliver net-new use cases to end users in as little as two weeks. We've since deployed Document AI to extract unstructured data from over 100,000 pages of text from various contracts, making it accessible for our users to ask insightful questions with natural language using a Streamlit chatbot that leverages Cortex Search.”

- Terakeet: “Snowflake Cortex AI has changed how we extract insights from our data at scale using the power of advanced LLMs, accelerating our critical marketing and sales workflows,” said Jennifer Brussow, Director of Data Science, Terakeet. “Our teams can now quickly and securely analyze massive data sets, unlocking strategic insights to better serve our clients and accurately estimate our total addressable market. We’ve reduced our processing times from 48 hours to just 45 minutes with the power of Snowflake’s new AI features. All of our marketing and sales operations teams are using Cortex AI to better serve clients and prospects.”

- TS Imagine: “We exclusively use Snowflake for our RAGs to power AI within our data management and customer service teams, which has been game changing. Now we can design something on a Thursday, and by Tuesday it’s in production,” said Thomas Bodenski, COO and Chief Data and Analytics Officer, TS Imagine. “For example, we replaced an error-prone, labor-intensive email sorting process to keep track of mission-critical updates from vendors with a RAG process powered by Cortex AI. This enables us to delete duplicates or non-relevant emails, and create, assign, prioritize, and schedule JIRA tickets, saving us over 4,000+ hours of manual work each year and nearly 30% on costs compared to our previous solution.”

Learn More:

- Read more about how Snowflake is making it faster and easier to build and deploy generative AI applications on enterprise data in this blog post.

- Learn how industry leaders like Bayer and Siemens Energy use Cortex AI to increase revenue, improve productivity, and better serve end users in this Secrets of Gen AI Success eBook.

- Join us at Snowflake’s virtual RAG ’n’ Roll Hackathon where developers can get hands-on with Snowflake Cortex AI to build RAG apps. Register for the hackathon here.

- Explore how users can easily harness the power of containers to run ML workloads at scale using CPUs or GPUs from Snowflake Notebooks in Container Runtime through this quickstart.

- See how users can quickly spin up a Snowflake Notebook and train an XGBoost model using GPUs in Container Runtime in this video.

- Check out all the innovations and announcements coming out of BUILD 2024 on Snowflake’s Newsroom.

- Stay on top of the latest news and announcements from Snowflake on LinkedIn and X.

¹As of October 31, 2024.

Forward Looking Statements

This press release contains express and implied forward-looking statements, including statements regarding (i) Snowflake’s business strategy, (ii) Snowflake’s products, services, and technology offerings, including those that are under development or not generally available, (iii) market growth, trends, and competitive considerations, and (iv) the integration, interoperability, and availability of Snowflake’s products with and on third-party platforms. These forward-looking statements are subject to a number of risks, uncertainties and assumptions, including those described under the heading “Risk Factors” and elsewhere in the Quarterly Reports on Form 10-Q and the Annual Reports on Form 10-K that Snowflake files with the Securities and Exchange Commission. In light of these risks, uncertainties, and assumptions, actual results could differ materially and adversely from those anticipated or implied in the forward-looking statements. As a result, you should not rely on any forward-looking statements as predictions of future events.

© 2024 Snowflake Inc. All rights reserved. Snowflake, the Snowflake logo, and all other Snowflake product, feature and service names mentioned herein are registered trademarks or trademarks of Snowflake Inc. in the United States and other countries.

About Snowflake

Snowflake makes enterprise AI easy, efficient and trusted. Thousands of companies around the globe, including hundreds of the world’s largest, use Snowflake’s AI Data Cloud to share data, build applications, and power their business with AI. The era of enterprise AI is here. Learn more at snowflake.com (NYSE: SNOW).

View source version on businesswire.com: https://www.businesswire.com/news/home/20241112275545/en/

Kaitlyn Hopkins

Senior Product PR Lead, Snowflake

press@snowflake.com

Source: Snowflake Inc.